A data scientist managing an ML project faces several recurring challenges. These include:

- Data availability and quality

- Feature engineering

- Model selection

- Model tuning

- Deployment and maintenance

- Legal and ethical considerations

Let’s look at each of these challenges in more detail.

Data availability and quality

ML algorithms typically need large amounts of high-quality data to train on. However, clean and relevant data is often hard to obtain, which can limit the performance of the model.

Data availability refers to the ease with which data can be obtained for a particular ML project. Obtaining high-quality data is often one of the most challenging and time-consuming aspects of an ML project. There are several reasons why data availability and quality can be a challenge:

- Limited data: In some cases, there may be very little data available for a particular problem. For example, consider a startup trying to build a recommendation system for a new online marketplace. If the marketplace is just starting out and has few users, it may be difficult to obtain sufficient data to train a reliable recommendation system.

- Inaccessible data: Even if the data exists, it may be difficult to obtain. For example, data may be stored in a proprietary format or held by a company that is unwilling to share it.

- Data quality: Even if data is available, it may not be of high quality. This can include issues such as missing values, incorrect or inconsistent labels, or data that is not representative of the problem at hand.

- Data privacy: In some cases, data may be sensitive and cannot be shared for legal or ethical reasons. For example, personal medical records cannot be shared without proper consent.

Ensuring that sufficient and high-quality data is available is crucial for the success of an ML project, as the performance of the ML model is directly related to the quality of the data it is trained on. If the data is of poor quality or is not representative of the problem at hand, the model is likely to perform poorly.
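The data quality issues listed above (missing values, inconsistent labels) can be checked before any training starts. The following is a minimal sketch using only the Python standard library. The function name `audit_records`, the field names and the toy records are all hypothetical and only serve as an illustration.

```python
from collections import Counter

def audit_records(records, required_fields, label_field):
    """Summarize missing values and label spelling variants in a list of dicts."""
    missing = Counter()
    labels = Counter()
    for row in records:
        for field in required_fields:
            value = row.get(field)
            if value is None or value == "":
                missing[field] += 1
        label = row.get(label_field)
        if label is not None:
            labels[label] += 1

    # Labels that differ only by case or surrounding whitespace are
    # probably the same class written inconsistently.
    variants = {}
    for label in labels:
        variants.setdefault(str(label).strip().lower(), []).append(label)
    inconsistent = {key: sorted(v) for key, v in variants.items() if len(v) > 1}

    return {
        "rows": len(records),
        "missing": dict(missing),
        "label_counts": dict(labels),
        "inconsistent_labels": inconsistent,
    }

# Hypothetical toy data with one missing age, one empty income,
# and labels written in inconsistent ways.
records = [
    {"age": 34, "income": 52000, "label": "churn"},
    {"age": None, "income": 48000, "label": "Churn"},
    {"age": 29, "income": "", "label": "stay"},
    {"age": 41, "income": 61000, "label": "stay "},
]
report = audit_records(records, ["age", "income"], "label")
print(report)
```

A report like this does not fix anything by itself, but it shows early whether the dataset needs cleaning before it is worth training on.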

Feature engineering

Creating features that represent the data in a meaningful way is an important step in the ML process. However, this can be time-consuming and require domain expertise.

Feature engineering is the process of creating features from raw data that can be used to train ML models. It is a crucial step in the ML process, as the quality of the features can have a significant impact on the performance of the model. However, feature engineering can be a challenging task for several reasons:

- Domain expertise: Creating features that are relevant and meaningful for a particular problem often requires domain expertise. For example, a data scientist working on a healthcare problem may need to understand the medical context in order to create useful features.

- Time-consuming: Creating features can be a time-consuming process, especially if the data is large or complex. It may require significant preprocessing and cleaning, and the data scientist may need to experiment with different approaches to find the most effective features.

- Lack of guidance: There is often no clear guidance on how to create the best features for a particular problem, so the data scientist may need to try multiple approaches and use their own judgment to determine what works best.

- Curse of dimensionality: As the number of features increases, the amount of data needed to cover the feature space grows rapidly, in the worst case exponentially. A model with many features may therefore need a much larger dataset to achieve good performance, and adding features without adding data can make it easier to overfit.

Overall, feature engineering is a crucial but challenging aspect of the ML process, and it requires both domain expertise and creativity to create effective features.
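To make the idea concrete, here is a small sketch of turning one raw record into model-ready features. It assumes a hypothetical order record with a `timestamp` and an `amount` field; the chosen features (hour of day, weekend flag, log-scaled amount) are illustrative guesses at what might matter, not a recipe.

```python
import math
from datetime import datetime

def engineer_features(raw):
    """Derive simple numeric features from a raw order record (hypothetical schema)."""
    ts = datetime.fromisoformat(raw["timestamp"])
    return {
        # Time of day often matters more than the exact timestamp.
        "hour": ts.hour,
        # weekday() returns 0 for Monday, so 5 and 6 are Saturday and Sunday.
        "is_weekend": int(ts.weekday() >= 5),
        # log1p compresses the long tail that monetary amounts usually have.
        "log_amount": math.log1p(raw["amount"]),
    }

features = engineer_features({"timestamp": "2024-03-09T22:15:00", "amount": 120.0})
print(features)
```

Whether these particular features help is exactly the kind of question that needs domain knowledge and experimentation.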

Model selection

There are many different ML algorithms to choose from, and it is often not clear which one will work best for a given problem. This can require extensive experimentation.

Model selection refers to the process of choosing the best ML algorithm for a particular problem. This can be a challenging task for several reasons:

- There are many algorithms to choose from: Each ML algorithm has its own strengths and weaknesses, so it can be difficult to tell in advance which one will work best for a particular problem. Finding out may require significant experimentation.

- Different algorithms suit different types of problems: Some algorithms are a better fit for certain data and prediction targets than others. For example, logistic regression applies to a categorical response and linear regression to a continuous one, while decision trees can handle both (as classification or regression trees).

- Algorithms may require different types of input: Some algorithms require that the input data be transformed in a particular way, such as scaling or normalization. This can make it more difficult to compare algorithms, as they may need to be tested on different versions of the input data.

- It can be difficult to determine the best hyperparameters: Each ML algorithm has a number of hyperparameters that need to be set in order to obtain good performance. It can be difficult to determine the optimal values for these hyperparameters, and it may require significant experimentation to find the best ones.

Overall, model selection is a crucial step in the ML process, but it can be challenging due to the large number of algorithms available and the need to determine which one will work best for a particular problem.
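One common way to run this experimentation is k-fold cross-validation, where every candidate is scored on the same held-out folds. The sketch below is deliberately tiny and uses only the standard library: the two candidates (a mean baseline and a one-variable least-squares line) and the synthetic data are assumptions chosen to keep the example short. In a real project you would more likely rely on a library such as scikit-learn.

```python
import random

def fit_mean(xs, ys):
    """Baseline candidate: always predict the mean of the training targets."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_line(xs, ys):
    """Second candidate: one-variable least-squares linear regression."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def cross_val_mse(fit, xs, ys, k=5):
    """Average mean squared error over k interleaved folds."""
    n = len(xs)
    fold_scores = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # every k-th point is held out
        train_x = [xs[i] for i in range(n) if i not in test_idx]
        train_y = [ys[i] for i in range(n) if i not in test_idx]
        model = fit(train_x, train_y)
        squared_errors = [(model(xs[i]) - ys[i]) ** 2 for i in test_idx]
        fold_scores.append(sum(squared_errors) / len(squared_errors))
    return sum(fold_scores) / k

# Synthetic data: a noisy straight line (illustrative only).
rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.3) for x in xs]

candidates = {"mean baseline": fit_mean, "linear regression": fit_line}
scores = {name: cross_val_mse(fit, xs, ys) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

Including a trivial baseline among the candidates is a cheap sanity check: if a complex model cannot beat it, something is wrong with the data or the setup.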

Model tuning

Even once an algorithm has been selected, there are often many hyperparameters that need to be tuned in order to obtain good performance.

Model tuning refers to the process of adjusting the hyperparameters of an ML model in order to obtain the best performance. Hyperparameters are values that are set before training rather than learned from the data, such as the learning rate or the maximum depth of a tree, and they control the model’s behavior. Tuning the hyperparameters of a model can be challenging for several reasons:

- There are often many hyperparameters to tune: Some ML models have many hyperparameters that need to be set, and it can be difficult to determine the optimal values for all of them.

- It can be time-consuming: Every candidate configuration requires training and evaluating the model again, so the cost grows quickly with the number of hyperparameters and with the time a single training run takes.

- The optimal hyperparameters may depend on the specific problem: The optimal hyperparameters for a model may depend on the characteristics of the specific problem that the model is being used to solve. This can make it difficult to determine the best hyperparameters in advance.

- There may be trade-offs between hyperparameters: Adjusting one hyperparameter may improve the performance of the model in one way, but it may also have negative impacts on other aspects of the model’s performance. Finding the right balance between hyperparameters can be challenging.

Overall, model tuning is an important step in the ML process, but it can be challenging due to the large number of hyperparameters that need to be tuned and the time and resources required to do so.
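A simple way to tune is a grid search: try every combination of candidate values and keep the one that scores best on held-out data. The sketch below does this for a toy k-nearest-neighbours regressor with two hyperparameters, `k` and `weighted`. The model, the grid values and the synthetic data are assumptions made for illustration only.

```python
import itertools
import math
import random

def knn_predict(train, x, k, weighted):
    """Predict y at x from the k nearest training points (1-D inputs)."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    if not weighted:
        return sum(y for _, y in neighbours) / len(neighbours)
    # Inverse-distance weighting; the small constant avoids division by zero.
    weights = [1.0 / (abs(px - x) + 1e-9) for px, _ in neighbours]
    return sum(w * y for w, (_, y) in zip(weights, neighbours)) / sum(weights)

def validation_mse(train, valid, k, weighted):
    """Mean squared error on the validation set for one configuration."""
    errors = [(knn_predict(train, x, k, weighted) - y) ** 2 for x, y in valid]
    return sum(errors) / len(errors)

# Synthetic data: a noisy sine curve, split into training and validation parts.
rng = random.Random(42)
inputs = [rng.uniform(0, 6) for _ in range(120)]
points = [(x, math.sin(x) + rng.gauss(0, 0.2)) for x in inputs]
train, valid = points[:90], points[90:]

# Every combination in the grid costs one full evaluation.
grid = {"k": [1, 3, 5, 9, 15], "weighted": [False, True]}
results = []
for k, weighted in itertools.product(grid["k"], grid["weighted"]):
    results.append((validation_mse(train, valid, k, weighted), k, weighted))

best_mse, best_k, best_weighted = min(results)
print(best_mse, best_k, best_weighted)
```

Even this small grid needs ten evaluations. Adding a third hyperparameter with five values would raise that to fifty, which is why tuning gets expensive so quickly.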

Deployment and maintenance

ML models often require significant resources to train and serve, and they may need to be retrained as the data distribution changes over time.

Deploying and maintaining an ML model can be challenging for several reasons:

- Resource requirements: Training and serving an ML model can require significant computational resources. This can be a challenge if the model is large or if it needs to be served in real-time to many users.

- Integration with other systems: In many cases, an ML model will need to be integrated with other systems, such as databases or web applications. This can be a complex process that requires the data scientist to work with developers to ensure that the model is properly integrated and serving predictions as expected.

- Retraining: ML models may need to be retrained as the data distribution changes over time. For example, a model trained to classify images of animals may start to perform poorly if it later encounters a species that was absent from its training data, and it would then need to be retrained on examples that include that species. Retraining a model can be a time-consuming process, and it may require additional resources and data.

- Monitoring: It is important to regularly monitor the performance of an ML model to ensure that it is still working as expected. This can involve monitoring the model’s performance on new data, as well as monitoring the overall system to ensure that it is running smoothly.

Overall, deploying and maintaining an ML model requires careful planning and ongoing effort to ensure that it continues to perform well over time.
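One way to monitor for a changing data distribution is to compare a feature's values in production against the values seen at training time. The sketch below uses the Population Stability Index (PSI) for this. The bin count, the `eps` floor and the synthetic data are assumptions, and any alert threshold would have to be chosen per project.

```python
import math
import random

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (expected) and a new sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range go into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor at eps so that empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic example: one live sample resembles training, the other has drifted.
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(1000)]
live_similar = [rng.gauss(0.0, 1.0) for _ in range(1000)]
live_shifted = [rng.gauss(1.0, 1.0) for _ in range(1000)]

psi_similar = population_stability_index(training, live_similar)
psi_shifted = population_stability_index(training, live_shifted)
print(psi_similar, psi_shifted)
```

A rising PSI on important features is a hint that retraining may be due, although it says nothing by itself about whether prediction quality has actually dropped.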

Legal and ethical considerations

ML projects can raise legal and ethical concerns, such as bias in the data or the potential for the model to be used in harmful ways. It is important for data scientists to be aware of these issues and address them appropriately.

Legal and ethical considerations can be a challenge in ML projects for several reasons:

- Data privacy: ML projects often involve working with sensitive data, such as personal information or medical records. It is important to ensure that this data is handled in accordance with relevant laws and regulations, such as the General Data Protection Regulation (GDPR) in the European Union or the California Consumer Privacy Act (CCPA) in California.

- Bias in data: ML models can sometimes perpetuate or amplify existing biases present in the data used to train them. For example, a model that is trained on data that is predominantly from a particular demographic group may not perform well on data from other groups. It is important to consider potential biases in the data and take steps to mitigate them.

- Fairness: ML models should be fair and unbiased in their predictions. For example, a model that is used to predict loan approval decisions should not discriminate against certain groups of people. Ensuring that ML models are fair can be a challenging task, as it may require carefully designing the model and the training data to avoid biases.

- Explainability: In many cases, it is important to be able to explain the decisions made by an ML model. This can be a challenge, as some ML models are difficult to interpret. Ensuring that ML models are explainable is important for accountability and transparency.

Overall, legal and ethical considerations are a central part of ML projects, and data scientists need to be aware of these issues and address them appropriately.
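Fairness can be checked with simple measurements before reaching for anything more elaborate. As a rough sketch for the loan approval example, the code below computes the approval rate per group and the gap between the highest and lowest rate (often called the demographic parity difference). The group labels and decisions are made-up toy data, and this is only one of several possible fairness criteria.

```python
from collections import defaultdict

def approval_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += int(decision)
    return {group: approved[group] / totals[group] for group in totals}

def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group approval rate (0 means equal)."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs for eight applicants from two groups.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = approval_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates, gap)
```

A large gap does not prove discrimination, since the groups may differ in legitimate ways, but it is a signal that the model and its training data deserve a closer look.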

Faster, optimized eCommerce websites sell 40% more than slower ones, simply because they are faster. You “will” agree once you see the money coming in ;) trust me.


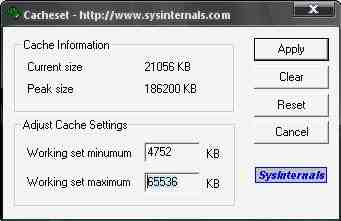

If you don’t know it already, CS-Cart uses Smarty as its template engine. Smarty is great from an ease-of-use point of view, but unfortunately Smarty v2 is not optimized for speed. Smarty v3 did much better on speed optimization and included multiple cache handlers, such as eAccelerator, APC and others.


I have to mention that APC caching improves your site’s performance A LOT. However, Smarty v2 does not include an APC cache handler, so I wrote one for Smarty v2, and thus for CS-Cart 3. It makes your content load directly from “RAM” instead of the hard drive. This means “very fast”.